There are several aspects I appreciate about the new Razr Ultra 2025, especially the introduction of the AI Key. Initially, I thought this would unlock a range of possibilities and provide easy access to the extensive AI features included in Motorola’s Moto AI suite. Unfortunately, the execution isn’t as strong as it could be, and the AI Key often feels like an afterthought, missing its chance to be Motorola’s answer to Apple’s Action Button.

The shortcomings become even more obvious in an increasingly AI-driven world. While Motorola’s take on AI falls short when compared to Google or Samsung, it still represents a modest first step. That being said, the AI Key holds promise if executed correctly, and both Google and Samsung would be wise to consider a similar feature in their future devices.

Moto AI Limitations

Motorola is attempting to carve out its niche in AI with its Moto AI chatbot, a conversational agent that is less functional than Google’s Gemini or Samsung’s Bixby. It can hold a conversation competently, but it can do very little on the phone itself, which undermines the point of having an AI assistant built in.

On the Razr Ultra 2025, users can set the AI Key to activate the Moto AI overlay with a long press. Unfortunately, this is the only action available for a long press, which feels like a major limitation. The button could plausibly launch any number of things, yet users can only choose Moto AI or nothing at all.

So I’m stuck using Moto AI. That’s manageable, but after I press the AI Key to bring it up, I still have to hit the mic button before I can start talking. That extra step feels unnecessary next to Gemini, which simply starts listening as soon as it is triggered via the power button or a corner swipe.

This issue may be linked to Moto AI analyzing the content on your display when activated, which helps it make suggestions about your next actions. However, I fail to see why it can’t allow voice interaction while concurrently performing its analysis.

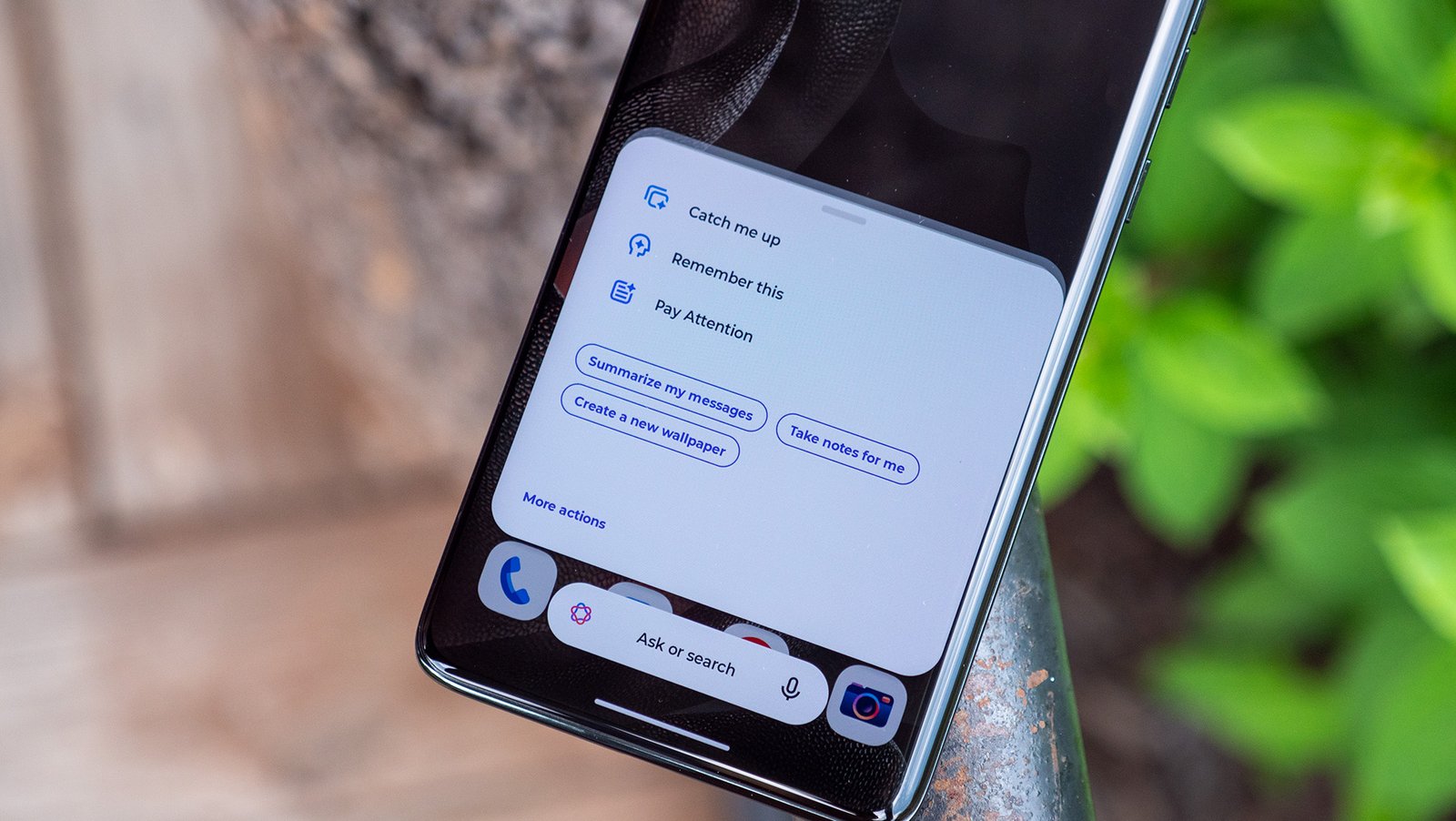

When I engage Gemini while watching a YouTube video, for instance, it recognizes that I’m viewing a video and displays options like “Ask about video” or “Talk Live about video.” Even so, it starts listening right away, so I can simply begin speaking. This alone highlights why the AI Key’s functionality should be expanded beyond just Motorola’s AI capabilities.

Moreover, the only other customization for the AI Key is a double tap, which is limited to activating “Catch Me Up” and “Remember This.” That’s it. Between the double tap and long press, users have access to just three functions through the AI Key, which feels like a waste of hardware.

Samsung Was on the Right Track

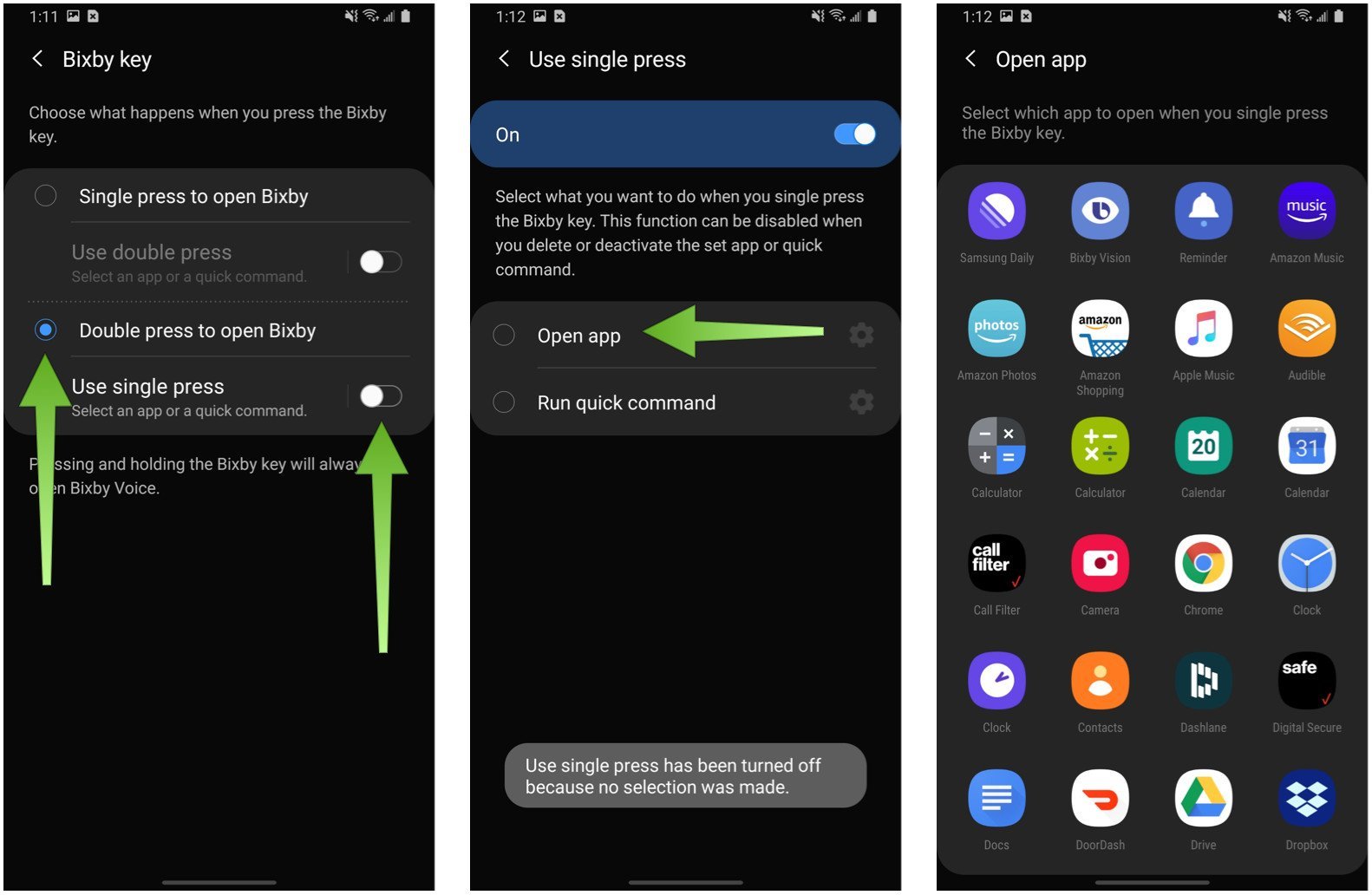

Samsung once featured a dedicated Bixby Key on its phones, which I initially viewed as a forced adoption of Bixby. I wasn’t a fan of this move, as Bixby always seemed to play second fiddle to Google Assistant. However, as AI continues to evolve, I now wish Samsung had retained that button and hope they consider reintroducing it.

Though the Bixby button was originally designed for easy Bixby access, Samsung allowed a degree of customization. For example, users could set one gesture—either a single tap or double tap—to open a preferred app or trigger a Routine, while the other was reserved for Bixby.

Moreover, consumers found ways to bypass Bixby entirely by utilizing third-party apps like Tasker to trigger Google Assistant instead. I’ve looked into similar workarounds for the Razr Ultra 2025, but haven’t had much success yet.

Nonetheless, Samsung had the right approach by offering users options while maintaining the button’s primary function. Other manufacturers have since ventured into the AI button space as well.

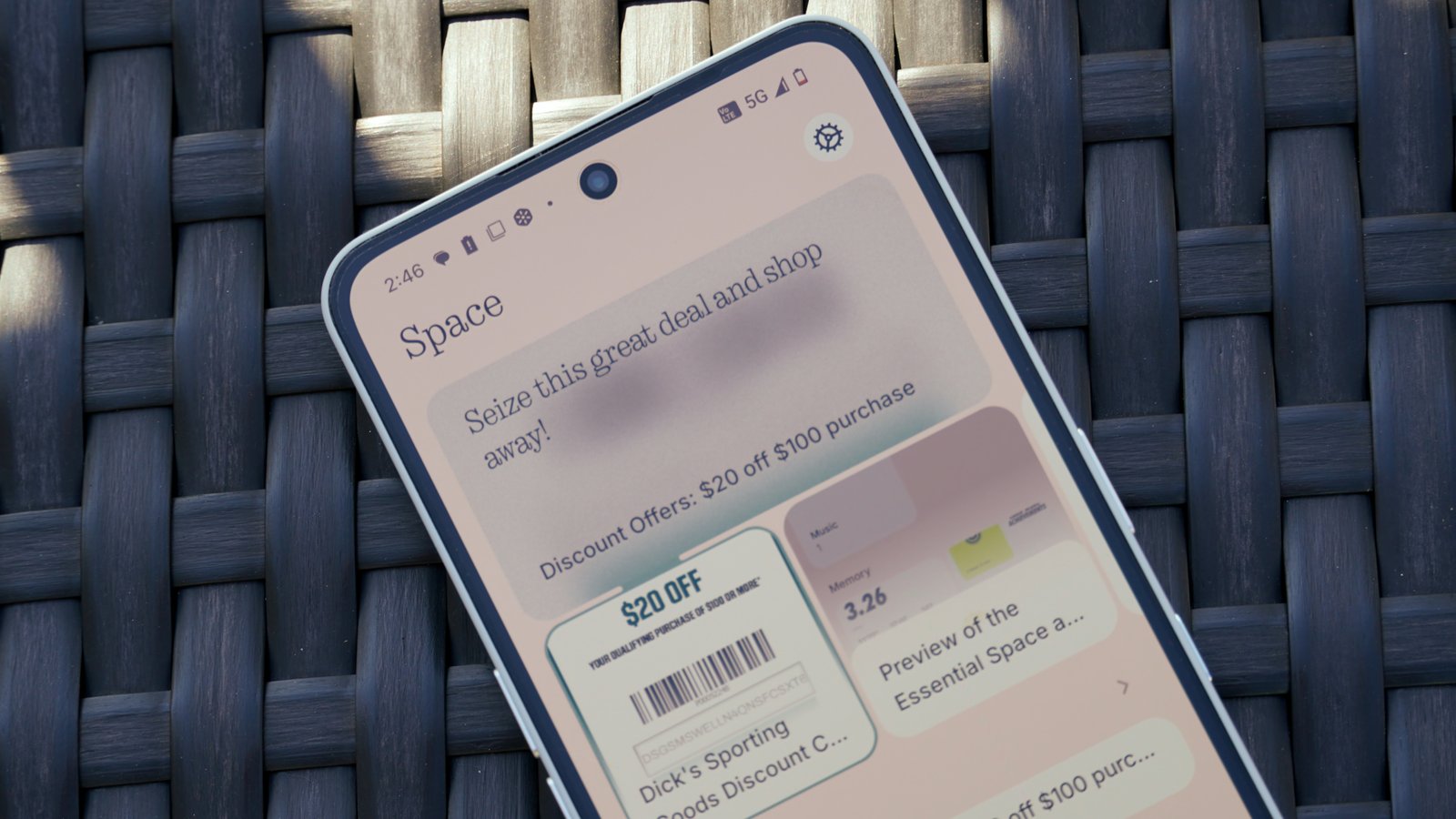

The Nothing Phone 3a and 3a Pro introduced an Essential Key that works closely with their new Essential Space. A single press captures content, a double press starts recording, and a long press opens Essential Space for easy access to saved content.

While not fully customizable, this setup already offers a better user experience than Motorola’s implementation by providing straightforward content access from a single button press, something Motorola lacks. Users are also finding ways to re-map this button, and we can only hope Nothing will open up further customizations soon.

TECNO has also introduced a One-Tap button on devices such as the Camon 40 Premier 5G, which activates its Ella AI assistant. Ironically, a single tap does nothing, yet a long press activates Ella, and users can customize a double press to launch any app they choose.

Although no one seems to have perfected the functionality of this extra button thus far, Samsung appears to be well-positioned to do so given its past experience with AI buttons. That said, the Pixel would also be an ideal candidate for an AI button.

Perfect for Pixel

Google should strongly consider adding an AI button to Pixel devices. While I’d love to see Samsung reintroduce a dedicated assistant button (and it certainly should), an AI button would also be incredibly beneficial for Pixel smartphones. Interestingly, my desire for this addition extends beyond just AI functionality.

In recent years, Android devices, including Pixels, have adopted the power button to trigger AI assistants. To turn off the phone, one now must hold both the power and volume up buttons. This is a departure from what I’m used to, since powering down previously took nothing more than a single button press.

While it’s easy to revert to the traditional method on a Pixel or other devices, I find it irritating when companies repurpose the power button for functions other than powering down. The change isn’t even necessary, since there are already alternative ways to invoke Gemini, such as swiping from the corner of the display or double-tapping the back of the phone.

A dedicated AI button would be an ideal solution for launching Gemini on a Pixel. Google could allow users to customize both single and double taps, while locking the long press to access Gemini or Gemini Live. They could even adopt a feature similar to Nothing’s approach to grant users easy access to the Pixel Screenshots app for quick saving and retrieval of on-device content.

With an increasing focus on AI in Pixel devices, this would be a fitting enhancement—provided Google gets it right. The Razr Ultra 2025 had a promising opportunity with its AI Key, but it has missed the mark by limiting its functionality and presenting an underwhelming Moto AI option. Google, along with Samsung, should take lessons from Motorola and others to improve their implementations and liberate the power button from its AI constraints.