The market for smart glasses is undeniably booming. During the Augmented World Expo (AWE) 2025 panels discussing the future of smart glasses, speakers expressed an optimistic outlook. Yet when the conversation turned specifically to augmented reality displays in glasses, industry experts from Google, Meta, and other tech giants presented complex and divergent visions for the future.

During discussions such as “What does it take to achieve mass market adoption of AR products?”, representatives from Google, Meta, and Qualcomm focused heavily on artificial intelligence and raised questions about whether “AR glasses” even require a visual display.

They reassured the audience that AR glasses would inevitably become commonplace, potentially replacing our smartphones in future generations. However, they differed significantly on how AR glasses can match the success smart glasses have already seen, particularly since smart glasses are generally more affordable and naturally more stylish.

Currently, AR glasses are carving out a space as extended displays within gaming and streaming markets. Notable demonstrations at AWE featured enhanced field of view (FoV) and color improvements in AR glasses from companies like Viture and XREAL.

However, beyond at-home entertainment, tech giants envision AR glasses as fashionable, all-day accessories for everyday consumers. Achieving this goal presents a formidable challenge.

Here’s what I gathered from the AWE 2025 panels regarding the future evolution of AR glasses, including the starkly different perspectives held by Meta and Google.

AR glasses lack the single roadmap to success that smart glasses have

During her discussion titled “State of Smart Glasses and What’s on the Horizon,” Meta VP of AR Devices Kelly Ingham addressed the evident hurdles facing AR glasses today.

“Displays remain quite heavy, right? How can you place a display in front of your eyes while ensuring comfort?” she noted rhetorically. “Should it be monocular or binocular? Additional weight translates to increased costs.”

XREAL’s Ralph Jodice, whose company recently launched Android XR-powered AR glasses, mentioned that XREAL has expanded its FoV from 30° to 57°, with plans to reach 70° soon. However, customer feedback indicates that the primary demand is “wider FoV and smaller glasses,” two goals that are hard to deliver together.

Ingham concluded that there will be a “wide array of categories” for AR glasses, ranging from 30-degree displays for notifications to 70-degree displays for immersive entertainment, and from cloud-based AI delivered through a smartphone to an “entire computer on your head.” There is no single avenue to success.

Bernard Kress, Director of Google AR, voiced a similar sentiment on the panel regarding the mass market adoption of AR products. He outlined a spectrum of potential AR “market segments”:

- Monocolor AR glasses with basic, subtle text functionality

- Full-color single-display glasses such as the Google AR prototype

- Binocular, full FoV glasses like Meta Orion or Snap Spectacles with increased power requirements and larger form factors

- Cost-effective “smart goggles” like XREAL One, which do not need to mimic traditional glasses

- Full optical see-through (OST) headsets like HoloLens

What Kress left unsaid is that this vagueness makes the future of AR glasses harder to picture, and too intricate for non-technical consumers to follow.

Smart glasses also come in a variety of form factors. Yet many consumers recognize Ray-Ban Meta glasses as the archetype for smart glasses: built-in speakers, cameras, AI assistance, and smartphone connectivity within a “normal” glasses design.

We can expect a broader diversity of designs with the anticipated Oakley Meta glasses or Android XR glasses developed by Warby Parker and Gentle Monster, although the fundamental experience should remain consistent and appealing to the average consumer.

Google contends AR requires a killer app for success

During the panel discussion, the moderator posed a question to Kress and representatives from Meta and Qualcomm: “Is a display necessary for smart glasses to qualify as AR?”

Edgar Auslander, a Senior Director at Meta, responded, “Not necessarily.” He argued that as long as “reality is augmented,” it could be achieved through audio, visual, or other sensory modifications. By that measure, Meta’s AI glasses would qualify as “AR glasses,” though that raises some concerns.

Kress offered a differing viewpoint, insisting that AR glasses require a display that delivers “something that cannot simply be replaced by audio.” He suggested that multimodal AI, a potential game-changer for smart glasses that is capable of analyzing visual input, could benefit from a display but could also function without one.

Kress, who previously worked on the original Google Glass, remarked that once the initial novelty faded, consumers found “no real use for it.” This led to Glass’s pivot towards enterprise applications, where developers could achieve clear “return on investment (ROI)” in sectors such as automotive, avionics, surgery, and architecture.

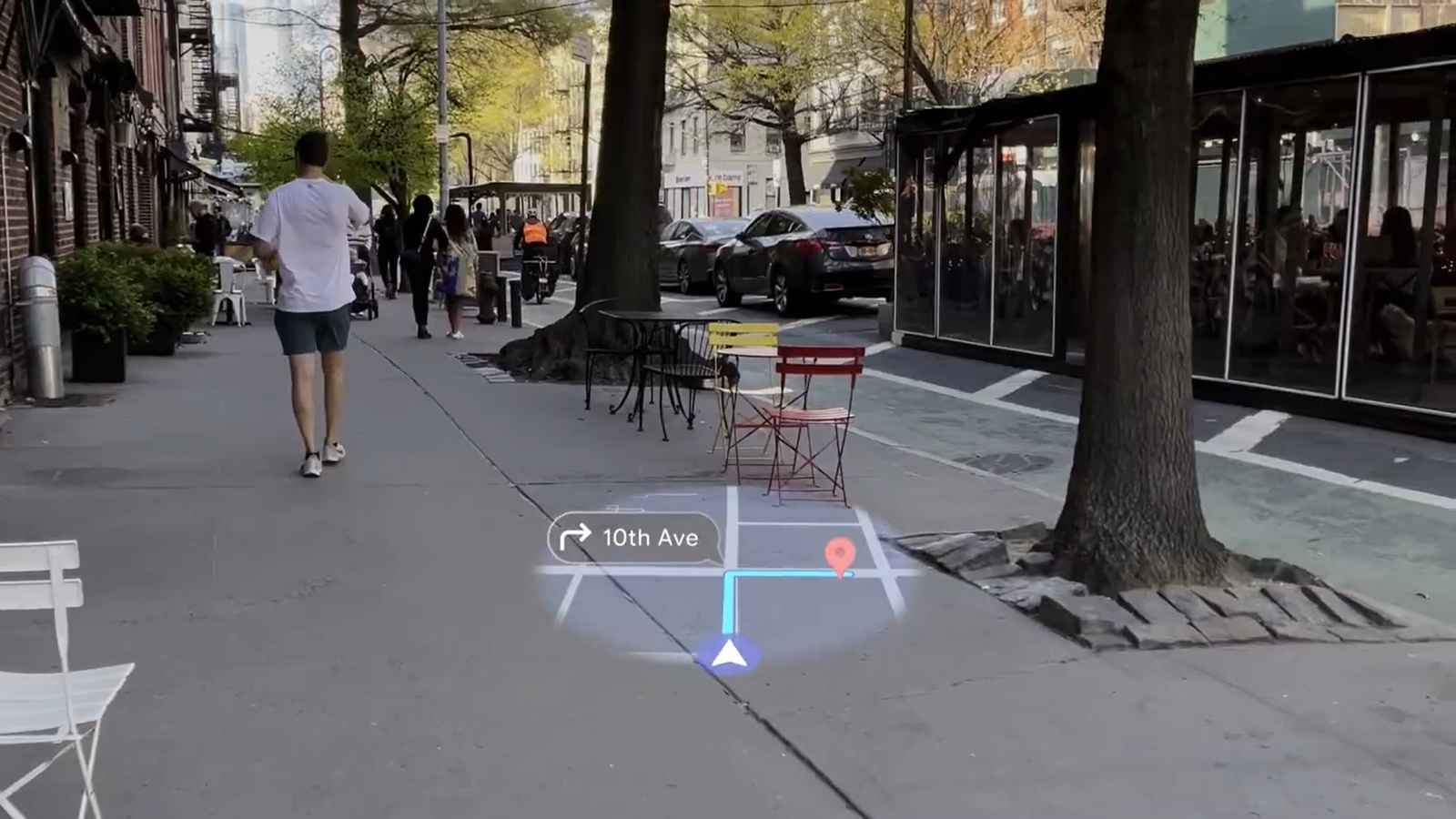

What will change for AR glasses moving forward? Kress believes the visual component becomes essential once glasses achieve “World Locking,” which relies on accurate six degrees of freedom (6DoF) tracking and a visual positioning system that references specific, physical elements in the real world. At present, the Android XR interface can only hover in open space.

He emphasized the need for affordable, energy-efficient microLEDs to display data effectively. However, he asserted that software remains the critical factor. It’s a familiar stance from a Google engineer, considering the company’s focus on Android XR while delegating hardware responsibilities to Samsung and others.

From a more hardware-focused and economic standpoint, Meta’s Ingham argued that smart glasses need to gain mainstream traction first, or AR glasses will struggle.

Meta has sold two million pairs of smart glasses, and Ingham said overall sales had “better be in double digits” (meaning tens of millions) by 2027; otherwise, it would be a troubling sign for the category.

She elaborated on how original equipment manufacturers (OEMs) like Apple and Google need to collaborate with Meta on consumer smart glasses to encourage retailers such as Best Buy and Target to view smart glasses as a “comprehensive category.” This way, they could “start promoting these products” with heightened marketing efforts.

Once consumers widely adopt AI glasses and are “trained” to use audio AI interfaces, Ingham believes they will “start expecting more,” preparing the market for more advanced AR glasses. However, she suggests this shift won’t occur until “after 2030.”

Ingham explained that this is why Meta is developing early prototypes like Meta Orion now: to prepare for later stages of the market’s evolution. The company is also addressing key pain points of smart glasses, such as weight, audio quality, limited variety of styles (particularly for women), and cost.

However, one significant concern she did not address was battery life, which I found noteworthy. No one at AWE could clearly explain how fully featured AR glasses can be compact enough to attract mainstream consumers while still delivering all-day battery life.

Currently, Android XR is limited to Project Moohan, but Google plans to expand support for AR and smart glasses software in late 2025. Meanwhile, Meta has opened its VR software with Horizon OS but does not intend to unlock its glasses operating system for developers just yet.

XREAL’s Jodice, a key Android XR partner, argued that an open system with easy access to an “ecosystem of devices” should draw in developers and kickstart AR app development.

Meta’s Ingham countered that the company’s priority remains bringing “enough devices to market” and establishing “better systems” first. “When you reach ten million devices, you can develop an app and actually generate revenue, right?” she said.

Android XR may establish a cohesive XR glasses ecosystem that makes it simpler for developers to build applications that target multiple products at once. However, these devices must first reach the market before developers can see a return on investment (ROI). While larger developers might engage with Android XR from the outset, smaller developers might prefer to wait until Samsung produces a tangible smart glasses product.